Abstract

Purpose

Robbers and thieves can strike unexpectedly and catch you off guard, even if you already have an emergency plan in place. During a robbery, you may have no time to pick up your phone and call for help. Some emergency apps combine all of these functions into a single action, but picking up the phone and opening the app still takes time and is likely to attract the robber's attention.

SmartSOS AI System

What should you do during a robbery with the SmartSOS AI System? First, remain calm and simply raise both hands. Then wave your hand left and right continuously for more than 3 seconds. The robber will likely read the waving as a plea not to hurt you and as a sign that you will follow their directions. At the same time, the SmartSOS AI System is triggered immediately, and an SOS alert message is sent to the registered contacts along with a map of your tracked location.
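The "wave for more than 3 seconds" rule can be sketched as a small hold timer. This is only an illustration in Python, not the project's actual firmware: it assumes a motion classifier that emits one label per time window, and the label name `"wave"`, the `WaveTrigger` class, and the sample timestamps are all hypothetical. Only the 3-second threshold comes from the description above.

```python
from dataclasses import dataclass, field
from typing import Optional

WAVE_LABEL = "wave"   # hypothetical class name emitted by the classifier
HOLD_SECONDS = 3.0    # from the text: wave for more than 3 seconds


@dataclass
class WaveTrigger:
    """Fires once when the wave gesture has been held long enough."""
    hold_seconds: float = HOLD_SECONDS
    _started_at: Optional[float] = field(default=None, init=False)
    _fired: bool = field(default=False, init=False)

    def update(self, label: str, timestamp: float) -> bool:
        """Feed one classifier result; return True only when the alert should fire."""
        if label != WAVE_LABEL:
            # Any other activity (walking, eating, ...) resets the timer.
            self._started_at = None
            self._fired = False
            return False
        if self._started_at is None:
            self._started_at = timestamp
        held = timestamp - self._started_at
        if held > self.hold_seconds and not self._fired:
            self._fired = True  # fire once per continuous wave
            return True
        return False


# Hypothetical stream of (seconds, predicted label) pairs.
stream = [(0.0, "walking"), (1.0, "wave"), (2.0, "wave"), (3.0, "wave"),
          (4.0, "wave"), (4.5, "wave"), (5.0, "wave")]
trigger = WaveTrigger()
fired_at = [t for t, label in stream if trigger.update(label, t)]
print(fired_at)
```

Resetting the timer on any non-wave label is one simple way to keep short, accidental hand movements from sending an alert. A real device might tolerate a brief misclassification instead of resetting immediately.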

This AI system was designed as an embedded system using the TensorFlow Lite TinyML framework. A continuous motion recognition system was built and implemented on the Arduino Nano. X-, Y-, and Z-axis data were collected, simulated, and processed with the TensorFlow Lite framework to analyze and predict the user's hand movement. As a result, the SmartSOS alert is not triggered when the user is performing daily activities such as walking, eating, or working.

ii) Android mobile phone, Android 10.

iii) Android Studio

iv) edgeimpulse.com

v) Arduino IDE

ii) Arduino Nano 33 BLE Sense (B).

iii) Android SmartPhone.

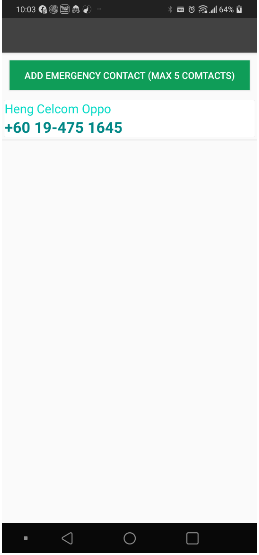

A) Select to connect Nano on Main Pages. B) Page for add emergency contact(s).

In the future, I hope this idea can be integrated into and fully used on an Android smartwatch (instead of the Arduino Nano Sense device) working together with Android phones.

Source Code

Image and icon: Link

Other apps:

USB OTGChecker.
